4 percent: the maximum share of a company's annual revenue that it can be fined for data compliance violations under GDPR (https://gdpr-info.eu/).

AI can now write sophisticated documents such as legal briefs and case file summaries, but to do so it must be given the relevant confidential data.

AI has been on the horizon for years, gradually growing in prominence. In 2023, however, ChatGPT and Bard took the market by storm, becoming some of the fastest-growing applications in computing history as users discovered just how capable they were.

These generative AI chat platforms were quickly put to work reading and summarizing reports, writing letters and legal briefs, formulating business plans, analyzing and converting data, and offering advice on thorny technical problems across many fields. They proved surprisingly competent at these tasks, able to take on a wide variety of problems and quickly produce complete or nearly complete solutions, documents, and letters.

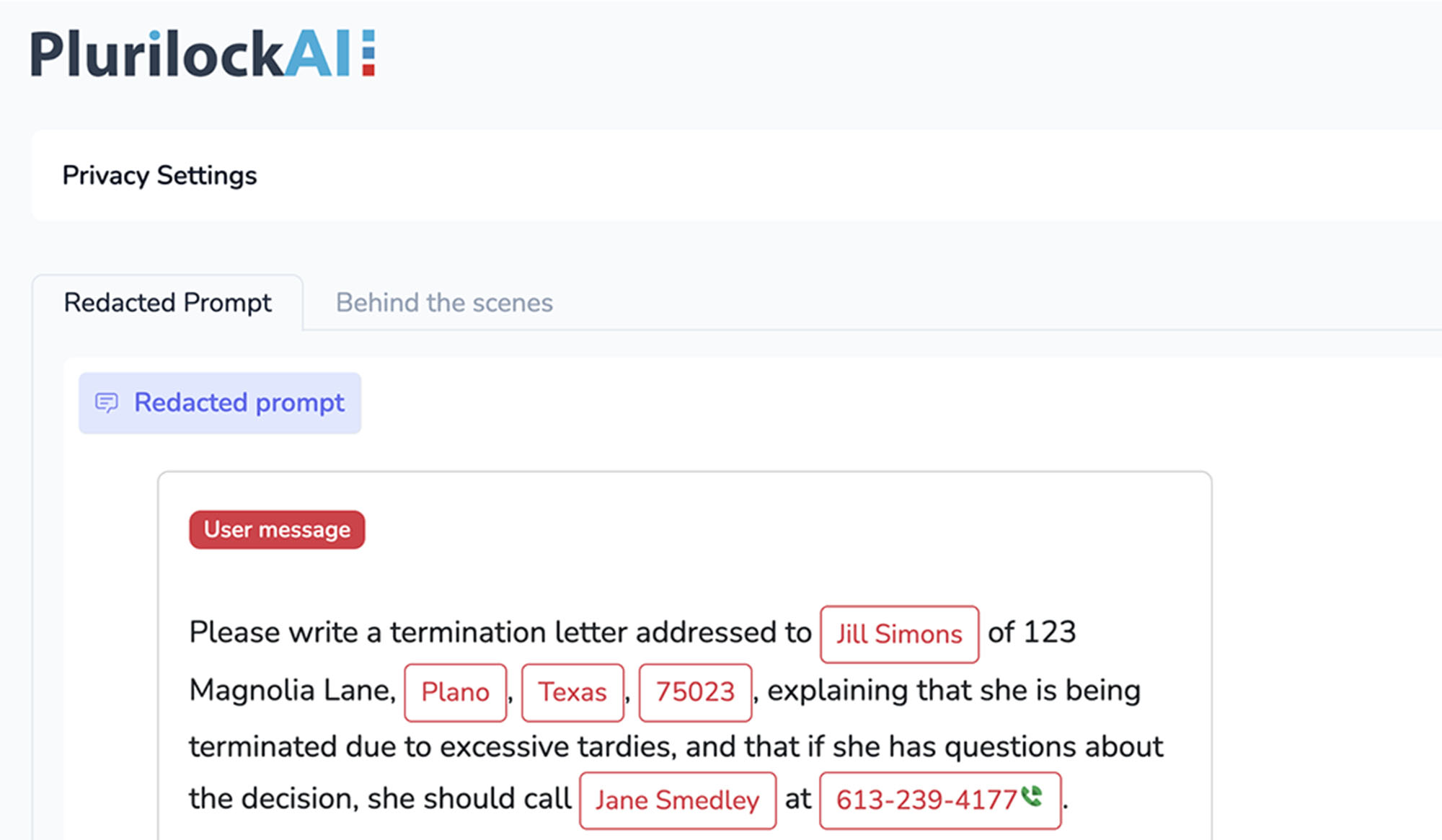

Lost in the furor, however, was the fact that to have AI do this work, users must give AI systems the background or source data the task requires: the internal documents to summarize, the employee files needed to write employee evaluations, the medical details in case histories. Much of this data is confidential or must not be shared.

In some ways, this massive, global data catastrophe has stayed relatively invisible because of the unique nature of AI. Users are fairly good at not sharing confidential data with strangers or with people who aren't authorized to see it. Traditionally, however, they have been far less careful about software; shadow IT (software adopted without the IT team's knowledge or approval) has long been a problem for IT teams.

The difference with AI is that AI systems behave more like people than like software: they learn, and they share. And because they can perform far more complex tasks far more comprehensively, the data users give them may differ in kind and in volume from the data typically fed into shadow IT tools.

Just as importantly, the shockingly rapid adoption of ChatGPT and Bard caught many companies flat-footed, without adequate governance or any AI use policy in place. The risks are not small. Incidents in which AI systems learned from submitted data and then revealed it to other, unrelated users have affected companies as large as Amazon. And where private data is governed by regulations like GDPR, the consequences of noncompliant data handling can be severe: fines of up to 4% of annual revenue.

For these reasons, companies are racing to adopt AI governance and to find technical solutions that can prevent confidential data from leaking to AI when users submit prompts to systems like ChatGPT and Bard.

Need AI Data Leaks solutions?

Plurilock offers a full line of industry-leading cybersecurity, technology, and services solutions for business and government.

Talk to us today.

Copyright © 2025 Plurilock Security Inc.