Key Points

- ChatGPT is an exciting technology that has helped many employees become more productive

- But to get ChatGPT to perform useful tasks, it must be prompted with questions and background information

- Often this comes in the form of data that employees wouldn't normally share with a stranger

- This takes company data out of company control and hands it to ChatGPT

Quick Read

In 2023, generative AI went mainstream, and for the first time employees at companies around the world were able to put a general-purpose AI platform to work performing real tasks productively.

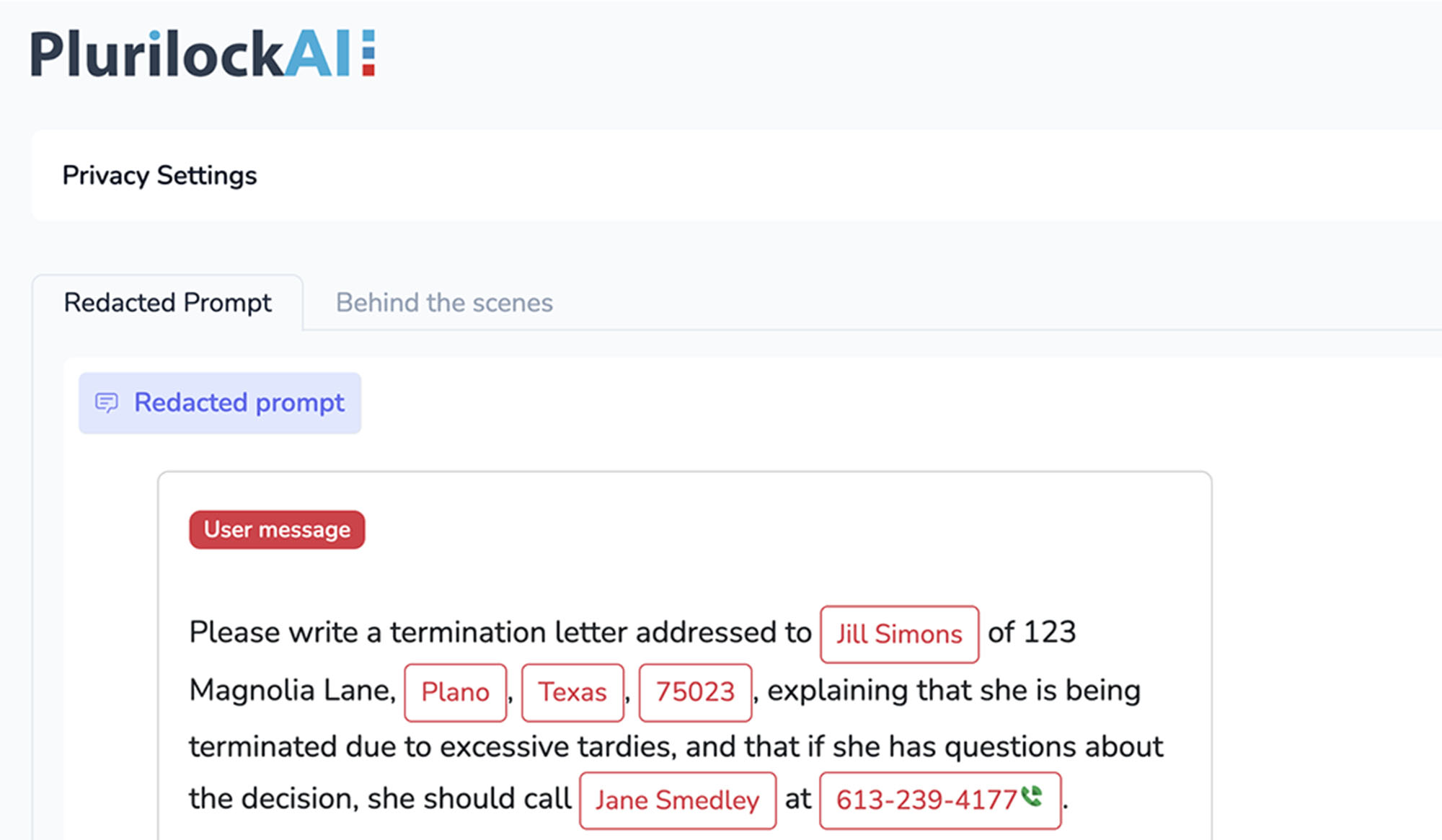

This all came with a catch, however: like a human assistant, ChatGPT can only perform a task when it has all of the information needed to do so. As employees ask ChatGPT to write letters, analyze reports, run numbers, summarize filings, and perform other office tasks, they are providing it with data that would normally never be shared with someone who isn't a company employee.

The risks run the gamut from the obvious concern that ChatGPT might "learn" from sensitive company data and later reveal it to another user entirely, to the fact that providing much of this data to a third party (which is what ChatGPT is at the end of the day) runs afoul of compliance requirements.

A number of solutions have emerged since ChatGPT's explosion into the mainstream, many of them ad hoc. At the simplest level, some organizations have banned ChatGPT use outright and blocked ChatGPT on their networks. Others have managed to shoehorn security controls for ChatGPT into existing DLP or network security tools.

More elegant solutions, like Plurilock AI PromptGuard, intelligently analyze the interaction between an employee and ChatGPT and then "clean up" the communication, hiding sensitive data from ChatGPT before ChatGPT is able to see it. As a solution, this is generally superior to network blocks because it enables continued AI productivity and avoids creating incentives for users to try to work around controls.
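To make the idea of "cleaning up" a prompt concrete, here is a minimal sketch in Python. It is not how Plurilock AI PromptGuard is implemented; the patterns, the placeholder format, and the `redact` and `restore` function names are all assumptions made for illustration, and a real product would need far more robust detection than two regular expressions.

```python
import re

# Hypothetical detection patterns, chosen only for illustration.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(prompt):
    """Swap sensitive values for placeholders before the prompt leaves the company.

    Returns the cleaned prompt and a mapping from placeholder to original value.
    """
    mapping = {}

    def make_replacer(label):
        def replacer(match):
            # Number placeholders in the order values are found.
            token = f"[{label}_{len(mapping) + 1}]"
            mapping[token] = match.group(0)
            return token
        return replacer

    for label, pattern in PATTERNS.items():
        prompt = pattern.sub(make_replacer(label), prompt)
    return prompt, mapping


def restore(text, mapping):
    """Put the original values back into the AI's response, locally."""
    for token, original in mapping.items():
        text = text.replace(token, original)
    return text
```

In this sketch, calling `redact("Email jane.doe@example.com about SSN 123-45-6789 today")` returns the text `Email [EMAIL_1] about SSN [SSN_2] today` along with the mapping needed to restore the real values in the response. The user still gets a useful answer, but the third party only ever sees the placeholders.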

Need AI Safety solutions?

We can help!

Plurilock offers a full line of industry-leading cybersecurity, technology, and services solutions for business and government.

Talk to us today.

More to Know

Firewall Rules Aren't Enough

Firewall rules are good at blocking traffic on networks that you control, but AI is a compelling technology, compelling enough to send users looking for workarounds, whether that's running queries at home, ducking out to the coffee shop WiFi with a laptop, or even having someone else run a prompt for them.

Neither Is Traditional DLP

The problem with jury-rigging a DLP platform or network traffic filter of some kind to detect bad prompts is that these tools still simply interrupt the user and say "nope." They don't provide a way for the user to accomplish the work they're trying to accomplish.