Generative AI has seen explosive growth in popularity this year. Platforms like ChatGPT and Bard are quickly becoming go-to tools for everyday work—at the same time that they have become problems for information technology and compliance teams. A new term is creeping into the corporate vocabulary as a result: “shadow AI.”

Among other things, “shadow AI” reflects companies’ concerns that employees using AI systems may:

- Leak confidential or sensitive data to AI systems as they provide AI prompts or input
- Leak confidential or sensitive data to AI systems inadvertently by using other software that has added AI-driven features
- Receive output or instructions from AI that are not accurate or sound, but use or act on them anyway

In other words, shadow AI shares much with the term “shadow IT”: it refers to the use of AI systems without the awareness or approval of the company, or of those within the company tasked with managing AI use and access. But it also adds new layers of meaning and concern specific to AI systems.

Using ChatGPT, Bard, or Other Bots Without Permission

In the most obvious case, it’s shadow AI when an employee uses ChatGPT, Bard, or another AI chatbot in violation of access policy or other governance guidelines.

And in the same spirit as the term “shadow IT,” we would also call it shadow AI in the less egregious case, when an employee simply begins to use AI without the awareness of anyone at the organization, even if no specific prohibition is in place.

Given the productivity gains that AI can provide, we expect this kind of shadow AI to become a bigger and bigger problem over time, and one that will likely prove just as resistant to a complete solution as shadow IT has been, for simple human reasons.

To combat this kind of shadow AI, companies should work to understand the risks that AI poses in corporate settings and the guardrails it requires, and should at the very least adopt basic AI governance and policies that are communicated clearly to employees.

Using AI Inadvertently in Other Software Applications

Given the explosion in ChatGPT’s user base during early 2023 and the amount of AI coverage in the media, not to mention the ready-made capabilities that large language models (LLMs) and other generative AI platforms offer, it was inevitable that software makers would rapidly find ways to integrate AI into their offerings.

This has happened throughout 2023, with InvGate reporting that 92 percent of the top technology companies have announced or are working on AI integrations. What we are seeing is a diffusion of AI capabilities, and thus of inadvertent input into AI systems, across the entire technology industry.

We expect this to become a bigger and bigger problem as well, given that the world today runs on software, and software will increasingly mean “AI.”

For the moment, technology and compliance teams are going to have to collaborate to combat this problem, as the best defense is currently a careful review of software before use, including:

- Which capabilities or features are “AI-enabled”
- What the nature of the AI enablement is (which underlying platform, what data is sent)
- Whether undesired AI capabilities and data collection can be managed or shut off
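For teams that want to make this pre-use review repeatable, the three questions above can be captured in a simple record. The sketch below is a hypothetical schema, not a standard or a product feature: `AIFeature`, `SoftwareReview`, and `open_concerns` are illustrative names, and the example application and data categories are invented. It flags any AI-enabled feature that sends data the organization considers sensitive and cannot be switched off.

```python
from dataclasses import dataclass, field


@dataclass
class AIFeature:
    """One AI-enabled capability found during review (hypothetical schema)."""
    name: str             # which capability or feature is AI-enabled
    platform: str         # nature of the enablement: underlying AI platform
    data_sent: list[str]  # nature of the enablement: data categories transmitted
    can_disable: bool     # whether the feature can be managed or shut off


@dataclass
class SoftwareReview:
    """Pre-use review record for a single application (hypothetical)."""
    app_name: str
    ai_features: list[AIFeature] = field(default_factory=list)


def open_concerns(review: SoftwareReview, sensitive: set[str]) -> list[str]:
    """List AI features that send sensitive data and cannot be turned off."""
    concerns = []
    for feature in review.ai_features:
        leaked = sensitive.intersection(feature.data_sent)
        if leaked and not feature.can_disable:
            concerns.append(
                f"{review.app_name}: {feature.name} sends "
                f"{sorted(leaked)} to {feature.platform}"
            )
    return concerns


# Example review of an invented note-taking app.
review = SoftwareReview("NoteTaker", [
    AIFeature("meeting summary", "third-party LLM API",
              ["transcripts", "attendee names"], can_disable=False),
    AIFeature("spell check", "on-device model",
              ["document text"], can_disable=True),
])
print(open_concerns(review, {"transcripts", "customer records"}))
```

Here only the meeting-summary feature is flagged: it sends transcripts and cannot be disabled, while the spell checker sends nothing on the sensitive list. What counts as "sensitive" is a policy decision each organization has to make for itself.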

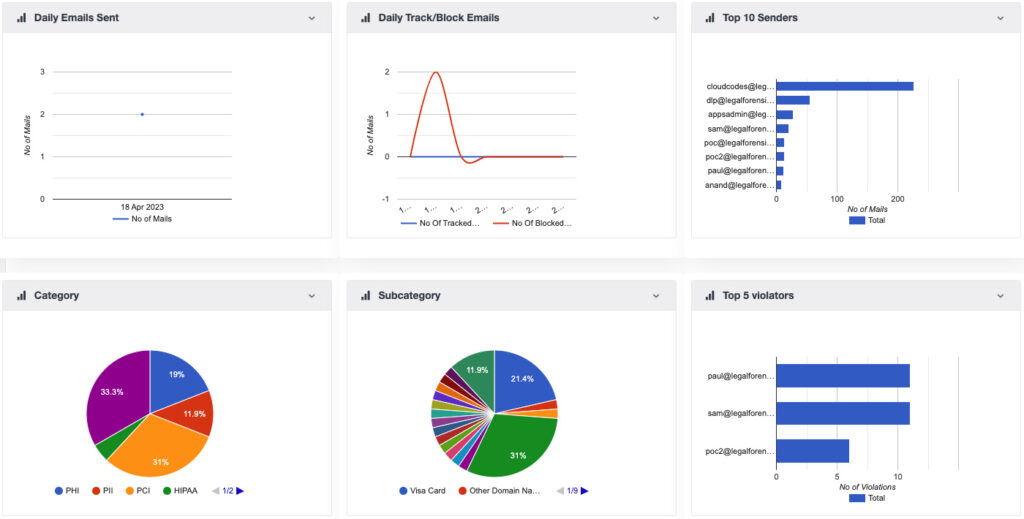

The best technology currently available to support this kind of governance probably lies in endpoint-based data loss prevention (DLP) tools. Given the variety and complexity of the integrations already in evidence, it’s unclear when an effective purpose-built tool to mitigate this kind of shadow AI will come to market.
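Endpoint DLP tools work by inspecting data before it leaves a device. As a rough illustration of that idea applied to AI prompts, the sketch below scans outbound prompt text against a few pattern rules and redacts what it finds. The rule names, the patterns, and the `scan_prompt` and `redact` helpers are hypothetical assumptions for this example; commercial DLP products rely on much broader detection (such as document fingerprinting and classifiers) and on hooks into the browser or operating system, none of which this sketch attempts.

```python
import re

# Hypothetical rules for illustration only; real DLP rule sets are far
# larger and are tuned to each organization's data.
PATTERNS = {
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "internal_tag": re.compile(r"\bCONFIDENTIAL\b", re.IGNORECASE),
}


def scan_prompt(text: str) -> dict[str, list[str]]:
    """Return each rule that matched the prompt, with the matched substrings."""
    hits = {}
    for label, pattern in PATTERNS.items():
        found = pattern.findall(text)
        if found:
            hits[label] = found
    return hits


def redact(text: str) -> str:
    """Replace every match with a placeholder naming the rule that fired."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label.upper()}]", text)
    return text


prompt = "Summarize this CONFIDENTIAL memo for jane.doe@example.com, SSN 123-45-6789."
if scan_prompt(prompt):
    # A real tool might block, warn, or log here; this sketch just redacts.
    print(redact(prompt))
```

Regular expressions are easy to audit, but they miss anything they were not written for, such as a pasted paragraph of unreleased strategy, so a check like this complements policy rather than replacing it.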

The bigger picture is that in this case, shadow AI becomes another form of shadow IT, and many of the same strategies will have to be applied to combat it. Employees will tend to make use of shadow AI in many of the same inadvertent ways they have long made use of shadow IT, unless technology teams are attentive and engaged enough to detect and stop them.

Unsound AI Output Leading to Unsound Actions

Shadow AI differs most fundamentally from shadow IT in that AI changes the contours of employee work in ways that other technology doesn’t. Most importantly, AI can provide instructions or actionable information on the fly that no one beyond the AI itself has vetted, whereas the output of traditional information technology systems is generally structured and predictable.

More simply, no one is likely to be surprised by the result when Excel plots a chart from input data. Not so with AI: from the user’s perspective, the old rule “garbage in, garbage out” no longer holds, because even sound input can produce unsound output. AI systems are now understood to regularly “hallucinate,” producing wrong answers that are nonetheless grammatical and persuasive. Recall the attorney whose AI-written brief was eloquent, yet cited court decisions that the AI had fabricated.

This implies a novel, output-centric sense of the term “shadow AI.” Companies are affected by shadow AI to the extent that they rely on “artificially intelligent” data or output in their strategy, tactics, decisions, or day-to-day work in ways that they don’t fully understand or anticipate, whether or not the actual AI use was known and approved.

This is likely the deepest of the emerging AI problems, and resolving it will require new kinds of training and awareness that simple policies or software tools cannot provide on their own. But as a first step, companies should at least begin to address this kind of shadow AI with policy guidance in the employee handbook: an admonition to employees that while AI is powerful, its output is never to be trusted without verification, in keeping with the zero trust posture that many organizations have adopted.

Shadow AI Is No Longer the Problem of the Future

Given the adoption and integration data that we have on AI, it’s clear that as a global economy we have entered the AI age—in much the same way that we entered the information age in the last decades of the 20th century.

And in much the same way that the problem of shadow IT has bedeviled information technology and compliance teams for many years now, shadow AI seems destined to become one of the key problems and governance targets of the future.

Our ability to limit and control shadow AI, both through policy and through emerging technology products like Plurilock PromptGuard, will dictate much about whether the “AI revolution” is ultimately as productive and beneficial for the companies of today as the “IT revolution” was for companies of the past. ■